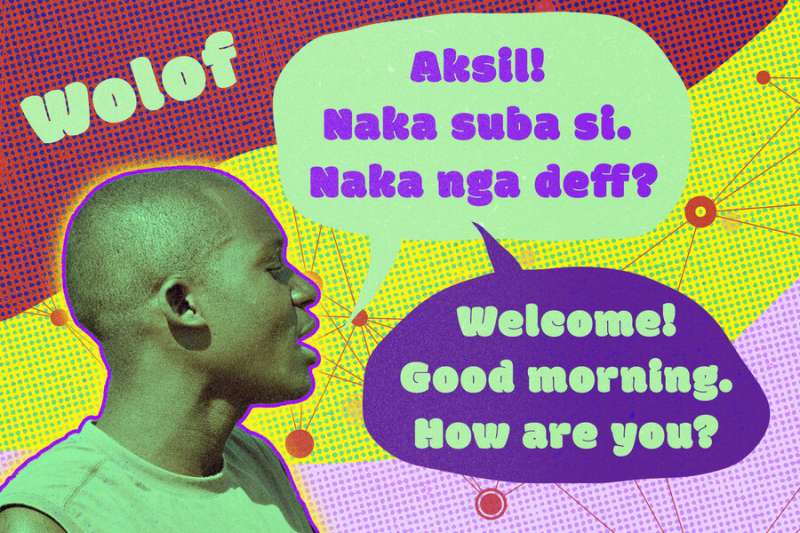

PARP is a new technique that reduces the computational complexity of an advanced machine learning model so it can be applied to perform automated speech recognition for rare or uncommon languages, like Wolof, which is spoken by 5 million people in West Africa. Credit: Jose-Luis Olivares, MIT

Automated speech-recognition technology has become more common with the popularity of virtual assistants like Siri, but many of these systems only perform well with the most widely spoken of the world's roughly 7,000 languages.

Because these systems largely don't exist for less common languages, the millions of people who speak them are cut off from many technologies that rely on speech, from smart home devices to assistive technologies and translation services.

Recent advances have enabled machine learning models that can learn the world's uncommon languages, which lack the large amount of transcribed speech needed to train algorithms. However, these solutions are often too complex and expensive to be applied widely.

Researchers at MIT and elsewhere have now tackled this problem by developing a simple technique that reduces the complexity of an advanced speech-learning model, enabling it to run more efficiently and achieve higher performance.

Their technique involves removing unnecessary parts of a common, but complex, speech recognition model and then making minor adjustments so it can recognize a specific language. Because only small tweaks are needed once the larger model is cut down to size, it is much less expensive and time-consuming to teach this model an uncommon language.

This work could help level the playing field and bring automatic speech-recognition systems to many areas of the world where they have yet to be deployed. The systems are important in some academic environments, where they can assist students who are blind or have low vision, and they are also being used to improve efficiency in health care settings through medical transcription and in the legal field through court reporting. Automatic speech recognition can also help users learn new languages and improve their pronunciation skills. This technology could even be used to transcribe and document rare languages that are in danger of vanishing.

"This is an important problem to solve because we have amazing technology in natural language processing and speech recognition, but taking the research in this direction will help us scale the technology to many more underexplored languages in the world," says Cheng-I Jeff Lai, a Ph.D. student in MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) and first author of the paper.

Lai wrote the paper with fellow MIT Ph.D. students Alexander H. Liu, Yi-Lun Liao, Sameer Khurana, and Yung-Sung Chuang; his advisor and senior author James Glass, senior research scientist and head of the Spoken Language Systems Group in CSAIL; MIT-IBM Watson AI Lab research scientists Yang Zhang, Shiyu Chang, and Kaizhi Qian; and David Cox, the IBM director of the MIT-IBM Watson AI Lab. The research will be presented at the Conference on Neural Information Processing Systems in December.

Learning speech from audio

The researchers studied a powerful neural network, called wav2vec 2.0, that has been pretrained to learn basic speech from raw audio.

A neural network is a series of algorithms that can learn to recognize patterns in data; modeled loosely on the human brain, neural networks are arranged into layers of interconnected nodes that process data inputs.

wav2vec 2.0 is a self-supervised learning model, so it learns to recognize a spoken language after it is fed a large amount of unlabeled speech. The training process requires only a few minutes of transcribed speech. This opens the door to speech recognition for uncommon languages that lack large amounts of transcribed speech, like Wolof, which is spoken by 5 million people in West Africa.

However, the neural network has about 300 million individual connections, so it requires a massive amount of computing power to train on a specific language.

The researchers set out to improve the efficiency of this network by pruning it. Just as a gardener cuts off superfluous branches, neural network pruning involves removing connections that aren't necessary for a specific task, in this case, learning a language. Lai and his collaborators wanted to see how the pruning process would affect this model's speech recognition performance.
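To make the gardening analogy concrete, here is a minimal Python sketch of one common pruning rule, magnitude pruning, which treats the connections with the smallest weights as the least necessary. The article does not say which criterion the researchers used, so the magnitude rule is an assumption here. The helper names `magnitude_prune` and `apply_mask` are hypothetical, and the six weights are made-up numbers standing in for a network's roughly 300 million connections.

```python
def magnitude_prune(weights, sparsity):
    """Return a 0/1 mask that removes the `sparsity` fraction of smallest weights.

    Assumption: a connection's importance is its absolute weight value.
    """
    n_remove = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    mask = [1] * len(weights)
    for i in order[:n_remove]:
        mask[i] = 0  # this connection is pruned
    return mask


def apply_mask(weights, mask):
    """Zero out every connection whose mask entry is 0."""
    return [w if m else 0.0 for w, m in zip(weights, mask)]


# Hypothetical weights for six connections.
weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
mask = magnitude_prune(weights, sparsity=0.5)
print(mask)                       # [1, 0, 1, 0, 1, 0]
print(apply_mask(weights, mask))  # [0.8, 0.0, 0.3, 0.0, -0.6, 0.0]
```

Real pruning tools operate on weight tensors rather than Python lists, but the principle is the same: a binary mask decides which connections survive.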

After pruning the full neural network to create a smaller subnetwork, they trained the subnetwork with a small amount of labeled Spanish speech and then again with French speech, a process called finetuning.

"We would expect these two models to be very different because they are finetuned for different languages. But the surprising part is that if we prune these models, they will end up with highly similar pruning patterns. For French and Spanish, they have 97 percent overlap," Lai says.

They ran experiments using 10 languages, from Romance languages like Italian and Spanish to languages that have completely different alphabets, like Russian and Mandarin. The results were the same: the finetuned models all had a very large overlap.
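One way to put a number like "97 percent overlap" on two pruned models is to compare their masks, the 0/1 patterns that record which connections survive. The sketch below assumes overlap is measured as the intersection over union of the kept connections. The article does not specify the metric, and both the `mask_overlap` helper and the two example masks are hypothetical.

```python
def mask_overlap(mask_a, mask_b):
    """Intersection over union of the connections kept (mask value 1) by two masks."""
    if len(mask_a) != len(mask_b):
        raise ValueError("masks must cover the same connections")
    kept_a = {i for i, m in enumerate(mask_a) if m}
    kept_b = {i for i, m in enumerate(mask_b) if m}
    union = kept_a | kept_b
    if not union:
        return 1.0  # two masks that prune everything are trivially identical
    return len(kept_a & kept_b) / len(union)


# Hypothetical masks standing in for one network pruned after
# finetuning on two different languages.
mask_spanish = [1, 0, 1, 1, 0, 1, 0, 1]
mask_french = [1, 0, 1, 0, 0, 1, 1, 1]
print(f"overlap: {mask_overlap(mask_spanish, mask_french):.0%}")  # overlap: 67%
```

Here four of the six connections kept by either mask are kept by both, so the overlap is 4/6. A value near 1.0 would indicate that the two languages settled on nearly the same subnetwork.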

A simple solution

Drawing on that unique finding, they developed a simple technique to improve the efficiency and boost the performance of the neural network, called PARP (Prune, Adjust, and Re-Prune).

In the first step, a pretrained speech recognition neural network like wav2vec 2.0 is pruned by removing unnecessary connections. Then, in the second step, the resulting subnetwork is adjusted for a specific language and pruned again. During this second step, connections that had been removed are allowed to grow back if they are important for that particular language.

Because connections are allowed to grow back during the second step, the model only needs to be finetuned once, rather than over multiple iterations, which vastly reduces the amount of computing power required.
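The steps described above can be sketched on a toy problem. This illustration is not the authors' code. A four-weight linear model stands in for wav2vec 2.0, plain gradient descent stands in for finetuning, and pruning keeps the largest-magnitude weights. The data, learning rate, step count, and function names (`magnitude_mask`, `apply_mask`, `finetune`, `parp`) are all hypothetical. The detail taken from the article is that pruned connections are zeroed but not frozen, so a single finetuning pass can let them grow back before the final re-prune.

```python
def magnitude_mask(weights, sparsity):
    """0/1 mask that prunes the `sparsity` fraction of smallest-magnitude weights."""
    n_remove = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    mask = [1] * len(weights)
    for i in order[:n_remove]:
        mask[i] = 0
    return mask


def apply_mask(weights, mask):
    """Set pruned connections to zero."""
    return [w if m else 0.0 for w, m in zip(weights, mask)]


def finetune(weights, data, lr=0.1, steps=200):
    """Gradient descent on mean squared error for a linear model y = w . x.

    No mask is enforced here, so weights that pruning set to zero still
    receive gradient updates and can grow back.
    """
    w = list(weights)
    for _ in range(steps):
        grad = [0.0] * len(w)
        for x, y in data:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            for i, xi in enumerate(x):
                grad[i] += 2 * err * xi / len(data)
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w


def parp(pretrained, data, sparsity):
    """Toy Prune, Adjust, Re-Prune; returns (final weights, first mask, final mask)."""
    # Step 1 (Prune): choose a subnetwork from the pretrained weights alone.
    first_mask = magnitude_mask(pretrained, sparsity)
    pruned = apply_mask(pretrained, first_mask)
    # Step 2 (Adjust): finetune once on the target-language data.
    adjusted = finetune(pruned, data)
    # Step 2, continued (Re-Prune): prune back to the same sparsity, keeping
    # whichever connections matter most after adjustment.
    final_mask = magnitude_mask(adjusted, sparsity)
    return apply_mask(adjusted, final_mask), first_mask, final_mask


# Hypothetical "language" data: the target depends on inputs 0 and 3 only.
data = [
    ([1.0, 0.0, 0.0, 0.0], 1.0),
    ([0.0, 1.0, 0.0, 0.0], 0.0),
    ([0.0, 0.0, 1.0, 0.0], 0.0),
    ([0.0, 0.0, 0.0, 1.0], 0.8),
]
pretrained = [0.9, 0.05, 0.5, 0.02]  # hypothetical pretrained weights

final, first_mask, final_mask = parp(pretrained, data, sparsity=0.5)
print(first_mask)                    # [1, 0, 1, 0]
print(final_mask)                    # [1, 0, 0, 1]
print([round(w, 3) for w in final])  # [1.0, 0.0, 0.0, 0.8]
```

Connection 3 is pruned in the first step because its pretrained weight is tiny. The toy task depends on it, however, so it regrows during the single finetuning pass and survives the re-prune, while connection 2 is dropped instead. The sparsity is held fixed, so the final subnetwork is the same size as the first one.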

Testing the technique

The researchers put PARP to the test against other common pruning techniques and found that it outperformed them all for speech recognition. It was especially effective when there was only a very small amount of transcribed speech to train on.

They also showed that PARP can create one smaller subnetwork that can be finetuned for 10 languages at once, eliminating the need to prune separate subnetworks for each language, which could also reduce the expense and time required to train these models.

Moving forward, the researchers would like to apply PARP to text-to-speech models and also see how their technique could improve the efficiency of other deep learning networks.

"There are increasing needs to put large deep-learning models on edge devices. Having more efficient models allows these models to be squeezed onto more primitive systems, like cell phones. Speech technology is very important for cell phones, for instance, but having a smaller model does not necessarily mean it is computing faster. We need additional technology to bring about faster computation, so there is still a long way to go," Zhang says.

Self-supervised learning (SSL) is changing the field of speech processing, so making SSL models smaller without degrading performance is a crucial research direction, says Hung-yi Lee, associate professor in the Department of Electrical Engineering and the Department of Computer Science and Information Engineering at National Taiwan University, who was not involved in this research.

"PARP trims the SSL models, and at the same time, surprisingly improves the recognition accuracy. Moreover, the paper shows there is a subnet in the SSL model, which is suitable for ASR tasks of many languages. This discovery will stimulate research on language/task agnostic network pruning. In other words, SSL models can be compressed while maintaining their performance on various tasks and languages," he says.

More information: Cheng-I Jeff Lai et al, PARP: Prune, Adjust and Re-Prune for Self-Supervised Speech Recognition. arXiv:2106.05933v2 [cs.CL], arxiv.org/abs/2106.05933

This story is republished courtesy of MIT News (web.mit.edu/newsoffice/), a popular site that covers news about MIT research, innovation and teaching.

Citation: Toward speech recognition for uncommon spoken languages (2021, November 4) retrieved 4 November 2021 from https://techxplore.com/news/2021-11-speech-recognition-uncommon-spoken-languages.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.
