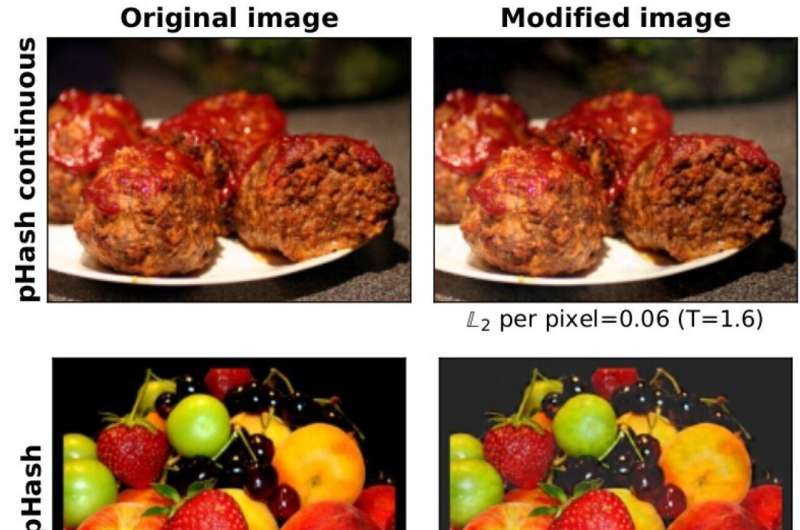

These images have been hashed so that they look different to detection algorithms but almost identical to us. Credit: Imperial College London

These images person been hashed, truthful that they look antithetic to detection algorithms but astir identical to us. Credit: Imperial College London

Proposed algorithms that detect illegal images on devices can be easily fooled with imperceptible changes to images, Imperial research has found.

Companies and governments have proposed using built-in scanners on devices like phones, tablets and laptops to detect illegal images, such as child sexual abuse material (CSAM). However, the new findings from Imperial College London raise questions about how well these scanners might work in practice.

Researchers who tested the robustness of five similar algorithms found that altering an 'illegal' image's unique 'signature' on a device meant it would fly under the algorithm's radar 99.9 percent of the time.

The scientists behind the peer-reviewed study say their testing demonstrates that, in its current form, so-called perceptual hashing based client-side scanning (PH-CSS) algorithms will not be a 'magic bullet' for detecting illegal content like CSAM on personal devices. It also raises serious questions about how effective, and therefore proportional, current plans to tackle illegal material through on-device scanning really are.

The findings are published as part of the USENIX Security Conference in Boston, U.S.

Senior author Dr. Yves-Alexandre de Montjoye, of Imperial's Department of Computing and Data Science Institute, said: "By simply applying a specifically designed filter mostly imperceptible to the human eye, we misled the algorithm into thinking that two near-identical images were different. Importantly, our algorithm is able to generate a large number of diverse filters, making the development of countermeasures difficult.

"Our findings raise serious questions about the robustness of such invasive approaches."

Apple recently proposed, and then postponed due to privacy concerns, plans to introduce PH-CSS on all its user devices. There are also reports that certain governments are considering using PH-CSS as a law enforcement technique to bypass end-to-end encryption.

Under the radar

PH-CSS algorithms can be built into devices to scan for illegal material. The algorithms sift through a device's images and compare their signatures with those of known illegal material. Upon finding an image that matches a known illegal image, the device would quietly report this to the company behind the algorithm and, ultimately, to law enforcement authorities.
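To make the matching step concrete, here is a minimal Python sketch of how a perceptual-hash scanner might compare an image's signature against a list of known signatures. It is an illustration only: it uses the simple "average hash" (aHash) technique, not Apple's proposed system or any specific algorithm from the study, and it assumes the image has already been shrunk to an 8x8 grayscale grid. The function names and the 10-bit match threshold are hypothetical choices.

```python
from typing import Iterable, Sequence


def average_hash(pixels: Sequence[Sequence[int]]) -> int:
    """Return a 64-bit aHash of an 8x8 grayscale grid (values 0-255)."""
    flat = [p for row in pixels for p in row]
    if len(flat) != 64:
        raise ValueError("expected an 8x8 grid")
    mean = sum(flat) / len(flat)
    h = 0
    for p in flat:
        # One bit per pixel: 1 if brighter than the image's mean, else 0.
        h = (h << 1) | (1 if p > mean else 0)
    return h


def hamming(a: int, b: int) -> int:
    # Number of bit positions where the two hashes differ.
    return bin(a ^ b).count("1")


def is_flagged(image_hash: int, known_hashes: Iterable[int], threshold: int = 10) -> bool:
    # Hypothetical rule: report a match if the hash is within `threshold` bits of any known hash.
    return any(hamming(image_hash, k) <= threshold for k in known_hashes)


# Usage: a gradient "known" image, a brightened copy, and an unrelated (inverted) image.
known = [[(r * 8 + c) * 4 for c in range(8)] for r in range(8)]
brighter = [[p + 3 for p in row] for row in known]
inverted = [[252 - p for p in row] for row in known]
database = [average_hash(known)]
print(is_flagged(average_hash(brighter), database))  # True
print(is_flagged(average_hash(inverted), database))  # False
```

The brightened copy hashes to exactly the same value and is still flagged, which is the kind of robustness perceptual hashes are designed for. The study's point is that a carefully designed filter can instead push an image's hash past the match threshold while the picture looks unchanged to a person.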

To test the robustness of the algorithms, the researchers used a new class of tests called detection avoidance attacks to see whether applying their filter to simulated 'illegal' images would let them slip under the radar of PH-CSS and avoid detection. Their image-specific filters are designed to ensure the image avoids detection even when the attacker does not know how the algorithm works.

They tagged several everyday images as 'illegal' and fed them through the algorithms, which were similar to Apple's proposed systems, and measured whether or not they flagged an image as illegal. They then applied a visually imperceptible filter to the images, changing their signatures, and fed them through again.

After a filter was applied, the image looked different to the algorithm 99.9 percent of the time, despite looking almost identical to the human eye.
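The 99.9 percent figure is a rate: the share of filtered images that no longer match their originals. A hypothetical Python sketch of that arithmetic follows, assuming each test pair holds the 64-bit hashes of an original image and its filtered version, and that "matching" means a Hamming distance of at most 10 bits (an illustrative threshold, not one taken from the study). It only measures outcomes; it does not generate filters.

```python
from typing import Sequence, Tuple


def hamming(a: int, b: int) -> int:
    # Number of bit positions where the two hashes differ.
    return bin(a ^ b).count("1")


def avoidance_rate(pairs: Sequence[Tuple[int, int]], threshold: int = 10) -> float:
    """Fraction of (original_hash, filtered_hash) pairs that no longer match."""
    if not pairs:
        raise ValueError("need at least one pair")
    # A filtered image counts as evading detection if its hash is farther than the threshold.
    evaded = sum(1 for original, filtered in pairs if hamming(original, filtered) > threshold)
    return evaded / len(pairs)


# Hash distances of 0, 20, 11 and 10 bits: only the 20- and 11-bit pairs exceed the threshold.
pairs = [(0, 0), (0, (1 << 20) - 1), (0, (1 << 11) - 1), (0, (1 << 10) - 1)]
print(avoidance_rate(pairs))  # 0.5
```

Under this definition, a rate of 0.999 would mean that 999 of every 1,000 filtered images were no longer matched to their originals.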

The researchers say this highlights just how easily people with illegal material could fool the surveillance. For this reason, the team have decided not to make their filter-generation software public.

Co-lead author Ana-Maria Cretu, Ph.D. candidate at the Department of Computing, said: "Two images that look alike to us can look completely different to a computer. Our job as scientists is to test whether privacy-preserving algorithms really do what their champions claim they do.

"Our findings suggest that, in its current form, PH-CSS won't be the magic bullet some hope for."

Co-lead author Shubham Jain, also a Ph.D. candidate from the Department of Computing, added: "This realization, combined with the privacy concerns attached to such invasive surveillance mechanisms, suggests that even the best PH-CSS proposals today are not ready for deployment."

"Adversarial Detection Avoidance Attacks: Evaluating the robustness of perceptual hashing-based client-side scanning" by Shubham Jain, Ana-Maria Cretu, and Yves-Alexandre de Montjoye. Published 9 November 2021 as part of the USENIX Security Conference in Boston, U.S.

More information: Shubham Jain, Ana-Maria Cretu, Yves-Alexandre de Montjoye, Adversarial Detection Avoidance Attacks: Evaluating the robustness of perceptual hashing-based client-side scanning. arXiv:2106.09820v1 [cs.CR], arxiv.org/abs/2106.09820

Citation: Proposed illegal image detectors on devices are 'easily fooled' (2021, November 9) retrieved 9 November 2021 from https://techxplore.com/news/2021-11-illegal-image-detectors-devices-easily.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.