Credit: The Conversation

Facebook has been quietly experimenting with reducing the amount of political content it puts in users' news feeds. The move is a tacit acknowledgment that the way the company's algorithms work can be a problem.

The heart of the matter is the distinction between provoking a response and providing content people want. Social media algorithms, the rules their computers follow in deciding the content that you see, rely heavily on people's behavior to make these decisions. In particular, they watch for content that people respond to or "engage" with by liking, commenting and sharing.

As a computer scientist who studies the ways large numbers of people interact using technology, I understand the logic of using the wisdom of the crowds in these algorithms. I also see substantial pitfalls in how the social media companies do so in practice.

From lions on the savanna to likes on Facebook

The concept of the wisdom of crowds assumes that using signals from others' actions, opinions and preferences as a guide will lead to sound decisions. For example, collective predictions are usually more accurate than individual ones. Collective intelligence is used to predict financial markets, sports, elections and even disease outbreaks.
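A toy simulation can make the averaging argument concrete. The sketch below assumes that each person's guess is the true value plus independent Gaussian noise; the function name and all parameters are illustrative assumptions, not taken from any study. Averaging many independent guesses cancels much of the noise, so the crowd's error should end up far smaller than a typical individual's.

```python
import random
import statistics


def crowd_vs_individuals(
    true_value: float, n: int, noise_sd: float, seed: int = 0
) -> tuple[float, float]:
    """Return (error of the crowd average, mean error of individual guesses).

    Assumption: every guess is the true value plus independent Gaussian noise.
    """
    rng = random.Random(seed)
    guesses = [true_value + rng.gauss(0.0, noise_sd) for _ in range(n)]
    crowd_error = abs(statistics.fmean(guesses) - true_value)
    individual_error = statistics.fmean(abs(g - true_value) for g in guesses)
    return crowd_error, individual_error


crowd, individual = crowd_vs_individuals(true_value=100.0, n=1000, noise_sd=10.0)
print(f"crowd error: {crowd:.2f}, typical individual error: {individual:.2f}")
```

The independence assumption is doing all the work here; the model says nothing about what happens when guesses influence one another.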

Throughout millions of years of evolution, these principles have been coded into the human brain in the form of cognitive biases that come with names like familiarity, mere exposure and bandwagon effect. If everyone starts running, you should also start running; maybe someone saw a lion coming, and running could save your life. You may not know why, but it's wiser to ask questions later.

Your brain picks up clues from the environment, including your peers, and uses simple rules to quickly translate those signals into decisions: Go with the winner, follow the majority, copy your neighbor. These rules work remarkably well in typical situations because they are based on sound assumptions. For example, they assume that people often act rationally, that it is unlikely that many are wrong, that the past predicts the future, and so on.

Technology allows people to access signals from much larger numbers of other people, most of whom they do not know. Artificial intelligence applications make heavy use of these popularity or "engagement" signals, from selecting search engine results to recommending music and videos, and from suggesting friends to ranking posts on news feeds.

Not everything viral deserves to be

Our research shows that virtually all web technology platforms, such as social media and news recommendation systems, have a strong popularity bias. When applications are driven by cues like engagement rather than explicit search engine queries, popularity bias can lead to harmful unintended consequences.

Social media like Facebook, Instagram, Twitter, YouTube and TikTok rely heavily on AI algorithms to rank and recommend content. These algorithms take as input what you "like," comment on and share; in other words, content you engage with. The goal of the algorithms is to maximize engagement by finding out what people like and ranking it at the top of their feeds.
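In its simplest form, ranking by engagement means scoring each post from its interaction counts and sorting the feed by that score. The sketch below is a hypothetical illustration: the `Post` fields and the numbers in `WEIGHTS` are invented for the example, since platforms do not publish their actual formulas.

```python
from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    likes: int
    comments: int
    shares: int


# Hypothetical weights; real platforms' weightings are not public.
WEIGHTS = {"likes": 1.0, "comments": 2.0, "shares": 3.0}


def engagement_score(post: Post) -> float:
    """Weighted sum of interactions: more engagement means a higher score."""
    return (
        WEIGHTS["likes"] * post.likes
        + WEIGHTS["comments"] * post.comments
        + WEIGHTS["shares"] * post.shares
    )


def rank_feed(posts: list[Post]) -> list[Post]:
    """Order the feed so the most-engaged-with posts appear at the top."""
    return sorted(posts, key=engagement_score, reverse=True)


feed = [
    Post("a", likes=10, comments=0, shares=0),
    Post("b", likes=2, comments=1, shares=3),
    Post("c", likes=0, comments=5, shares=0),
]
print([p.post_id for p in rank_feed(feed)])  # ['b', 'a', 'c']
```

Note that nothing in the score reflects whether a post is accurate or credible; ties (here "a" and "c") keep their original order because Python's sort is stable.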

On the surface this seems reasonable. If people like credible news, expert opinions and fun videos, these algorithms should identify such high-quality content. But the wisdom of the crowds makes a key assumption here: that recommending what is popular will help high-quality content "bubble up."

We tested this assumption by studying an algorithm that ranks items using a mix of quality and popularity. We found that in general, popularity bias is more likely to lower the overall quality of content. The reason is that engagement is not a reliable indicator of quality when few people have been exposed to an item. In these cases, engagement generates a noisy signal, and the algorithm is likely to amplify this initial noise. Once the popularity of a low-quality item is large enough, it will keep getting amplified.
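The amplification of early noise can be illustrated with a deliberately simplified toy. It is not the model from the study described above: here the ranker uses popularity alone, always showing the item with the most engagement so far (with an optional `explore` probability of showing a random item instead), and each viewer engages with probability equal to the item's quality. All names and numbers are hypothetical.

```python
import random


def simulate_popularity_ranking(
    quality: dict[str, float],
    initial_engagement: dict[str, int],
    steps: int,
    explore: float = 0.0,
    seed: int = 0,
) -> tuple[dict[str, int], dict[str, int]]:
    """Toy rich-get-richer ranker. Returns (engagement counts, exposure counts)."""
    rng = random.Random(seed)
    engagement = dict(initial_engagement)
    exposures = {item: 0 for item in quality}
    items = list(quality)
    for _ in range(steps):
        if rng.random() < explore:
            shown = rng.choice(items)  # occasional random exposure
        else:
            shown = max(items, key=lambda item: engagement[item])  # most popular item
        exposures[shown] += 1
        # The viewer engages with probability equal to the item's quality.
        if rng.random() < quality[shown]:
            engagement[shown] += 1
    return engagement, exposures


# "B" is low quality but got lucky in its first few exposures.
engagement, exposures = simulate_popularity_ranking(
    quality={"A": 0.9, "B": 0.3},
    initial_engagement={"A": 1, "B": 3},
    steps=1000,
)
print(engagement["A"], exposures["A"])  # 1 0
```

With `explore=0`, the higher-quality item "A" never gets another exposure, because "B" starts ahead and its lead can only grow. A nonzero `explore` gives "A" occasional exposures, though whether it then overtakes "B" depends on the parameters.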

Algorithms aren't the only thing affected by engagement bias: it can affect people, too. Evidence shows that information is transmitted via "complex contagion," meaning the more times someone is exposed to an idea online, the more likely they are to adopt and reshare it. When social media tells people an item is going viral, their cognitive biases kick in and translate into the irresistible urge to pay attention to it and share it.
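Complex contagion is often formalized with a threshold model: a person adopts an idea only after at least a certain number of their contacts have adopted it. The deterministic sketch below uses that formalization on a small made-up network; the graph, the starting adopters and the thresholds are illustrative assumptions. A threshold of 1 corresponds to simple contagion, where a single exposure is enough.

```python
def threshold_cascade(
    neighbors: dict[str, set[str]], seeds: set[str], threshold: int
) -> set[str]:
    """Spread adoption until no remaining node has `threshold` adopting neighbors."""
    adopted = set(seeds)
    changed = True
    while changed:
        changed = False
        for node, nbrs in neighbors.items():
            # A node adopts once enough of its contacts have already adopted.
            if node not in adopted and len(nbrs & adopted) >= threshold:
                adopted.add(node)
                changed = True
    return adopted


network = {
    "a": {"b", "c"},
    "b": {"a", "c"},
    "c": {"a", "b", "d"},
    "d": {"c", "e"},
    "e": {"d"},
}
print(sorted(threshold_cascade(network, {"a", "b"}, threshold=1)))  # ['a', 'b', 'c', 'd', 'e']
print(sorted(threshold_cascade(network, {"a", "b"}, threshold=2)))  # ['a', 'b', 'c']
```

With a threshold of 2, the idea reaches "c", which hears it from two contacts, but stalls at "d", which hears it from only one: repeated exposure matters.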

Not-so-wise crowds

We recently ran an experiment using a news literacy app called Fakey. It is a game developed by our lab, which simulates a news feed like those of Facebook and Twitter. Players see a mix of current articles from fake news, junk science, hyper-partisan and conspiratorial sources, as well as mainstream sources. They get points for sharing or liking news from reliable sources and for flagging low-credibility articles for fact-checking.

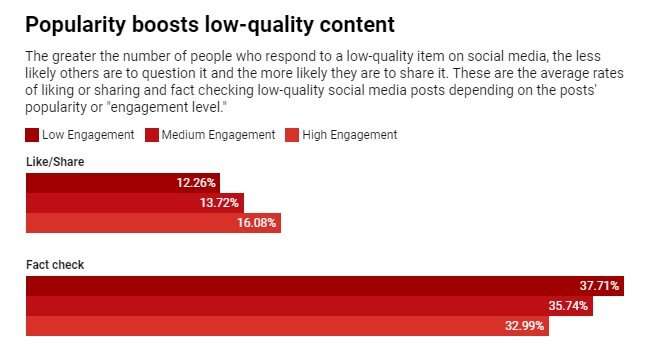

We found that players are more likely to like or share and less likely to flag articles from low-credibility sources when players can see that many other users have engaged with those articles. Exposure to the engagement metrics thus creates a vulnerability.

The wisdom of the crowds fails because it is built on the false assumption that the crowd is made up of diverse, independent sources. There may be several reasons this is not the case.
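Why independence matters can be seen by adding a shared bias to every guess in a toy averaging model. The sketch assumes each guess is the true value plus a common offset (standing in for correlated judgments, such as those formed in an echo chamber or under social influence) plus independent Gaussian noise; the function and its parameters are hypothetical. Averaging removes the independent noise but leaves the shared offset untouched, no matter how large the crowd.

```python
import random
import statistics


def crowd_error(
    true_value: float, n: int, noise_sd: float, shared_bias: float, seed: int = 0
) -> float:
    """Absolute error of the crowd average when every guess shares `shared_bias`."""
    rng = random.Random(seed)
    guesses = [true_value + shared_bias + rng.gauss(0.0, noise_sd) for _ in range(n)]
    return abs(statistics.fmean(guesses) - true_value)


independent = crowd_error(100.0, n=10_000, noise_sd=10.0, shared_bias=0.0)
correlated = crowd_error(100.0, n=10_000, noise_sd=10.0, shared_bias=5.0)
print(f"independent: {independent:.2f}, correlated: {correlated:.2f}")
```

Increasing `n` shrinks the independent error further, while the correlated error stays close to the size of the shared bias.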

First, because of people's tendency to associate with similar people, their online neighborhoods are not very diverse. The ease with which a social media user can unfriend those with whom they disagree pushes people into homogeneous communities, often referred to as echo chambers.

Second, because many people's friends are friends of each other, they influence each other. A famous experiment demonstrated that knowing what music your friends like affects your own stated preferences. Your social desire to conform distorts your independent judgment.

Third, popularity signals can be gamed. Over the years, search engines have developed sophisticated techniques to counter so-called "link farms" and other schemes to manipulate search algorithms. Social media platforms, on the other hand, are just beginning to learn about their own vulnerabilities.

People aiming to manipulate the information market have created fake accounts, like trolls and social bots, and organized fake networks. They have flooded the network to create the appearance that a conspiracy theory or a political candidate is popular, tricking both platform algorithms and people's cognitive biases at once. They have even altered the structure of social networks to create illusions about majority opinions.

Dialing down engagement

What to do? Technology platforms are currently on the defensive. They are becoming more aggressive during elections in taking down fake accounts and harmful misinformation. But these efforts can be akin to a game of whack-a-mole.

A different, preventive approach would be to add friction; in other words, to slow down the process of spreading information. High-frequency behaviors such as automated liking and sharing could be inhibited by CAPTCHA tests or fees. This would not only decrease opportunities for manipulation, but with less information, people would be able to pay more attention to what they see. It would leave less room for engagement bias to affect people's decisions.
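One way to add this kind of friction is a per-account rate check that lets ordinary activity through but challenges bursts of likes or shares. The sketch below is hypothetical: the class name, the sliding-window rule and the limits are assumptions for illustration, and the "challenge" result stands in for whatever a platform would actually do, such as showing a CAPTCHA or charging a fee.

```python
from collections import deque


class FrictionGate:
    """Hypothetical gate: allow up to `max_actions` likes/shares per account within
    `window_seconds`; beyond that, ask for a challenge instead of executing the action."""

    def __init__(self, max_actions: int, window_seconds: float) -> None:
        self.max_actions = max_actions
        self.window_seconds = window_seconds
        self._history: dict[str, deque[float]] = {}

    def check(self, account: str, now: float) -> str:
        events = self._history.setdefault(account, deque())
        # Forget actions that have slid out of the time window.
        while events and now - events[0] >= self.window_seconds:
            events.popleft()
        if len(events) >= self.max_actions:
            return "challenge"  # e.g. show a CAPTCHA before accepting more actions
        events.append(now)
        return "allow"


gate = FrictionGate(max_actions=3, window_seconds=60.0)
print([gate.check("bot_42", t) for t in (0, 1, 2, 3, 61)])
# ['allow', 'allow', 'allow', 'challenge', 'allow']
```

A person liking a few posts a minute never notices the gate, while an automated account firing off actions in rapid succession is slowed down; the right limits would be a policy choice.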

It would also help if social media companies adjusted their algorithms to rely less on engagement to determine the content they serve you.
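Relying less on engagement can be pictured as a single weight in the ranking score. The sketch below is a hypothetical illustration: it assumes each item has an engagement signal and some independent quality signal (for instance, a source-credibility rating), both already scaled to [0, 1], and blends them with an `engagement_weight` chosen by the platform. How a real platform would measure quality is outside the scope of this sketch.

```python
def blended_rank(
    items: list[tuple[str, float, float]], engagement_weight: float
) -> list[str]:
    """Rank (item_id, engagement, quality) tuples by a weighted mix of both signals."""

    def score(item: tuple[str, float, float]) -> float:
        _, engagement, quality = item
        return engagement_weight * engagement + (1.0 - engagement_weight) * quality

    return [item_id for item_id, _, _ in sorted(items, key=score, reverse=True)]


items = [("viral_junk", 0.9, 0.2), ("solid_report", 0.4, 0.9)]
print(blended_rank(items, engagement_weight=0.9))  # ['viral_junk', 'solid_report']
print(blended_rank(items, engagement_weight=0.3))  # ['solid_report', 'viral_junk']
```

Lowering `engagement_weight` lets the quality signal outweigh raw popularity, which is the direction the paragraph above suggests.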

This article is republished from The Conversation under a Creative Commons license. Read the original article.

Citation: How 'engagement' makes you vulnerable to manipulation and misinformation on social media (2021, September 13) retrieved 13 September 2021 from https://techxplore.com/news/2021-09-engagement-vulnerable-misinformation-social-media.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.