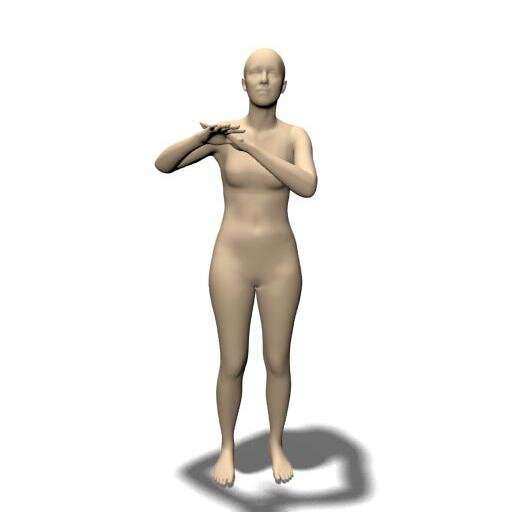

RGB Image of a customer. Credit: Tiwari & Bhowmick.

In recent years, some computer scientists have been exploring the potential of deep-learning techniques for virtually dressing 3D digital versions of humans. Such techniques could have many valuable applications, particularly for online shopping, gaming and 3D content generation.

Two researchers at TCS Research, India have recently created a deep learning technique that can predict how items of clothing will adapt to a given body shape and thus how they will look on specific people. This technique, presented at the ICCV Workshop, has been found to outperform other existing methods for virtually clothing human bodies.

"Online buying of apparel allows consumers to access and purchase a wide range of products from the comfort of their home, without going to physical stores," Brojeshwar Bhowmick, one of the researchers who carried out the study, told TechXplore. "However, it has one major limitation: It does not enable buyers to try clothes physically, which results in a high return/exchange rate due to clothes fitting issues. The concept of virtual try-on helps to resolve that limitation."

Virtual try-on tools allow people purchasing clothes online to get an idea of how a garment would fit and look on them, by visualizing it on a 3D avatar (i.e., a digital version of themselves). The potential buyer can infer how the item he/she is thinking of purchasing fits by looking at its folds and wrinkles in various positions or from different angles, as well as the gap between the avatar's body and the worn garment in the rendered image/video.

YouTube RGB image and draped image with a T-shirt and pants. Credit: Tiwari & Bhowmick.

Virtual try-on allows buyers to visualize any garment on a 3D avatar of themselves, as if they were wearing it. Two important factors that a buyer considers while deciding to purchase a particular garment are fit and appearance. In a virtual try-on setup, a person can infer how a particular garment fits by looking at folds and wrinkles in various poses and at the gap between the body and the garment in the rendered image or video.

"Previous work in this area, such as the development of the technique TailorNet, doesn't take the underlying human body measurements into account; thus, its visual predictions are not very accurate, fitting-wise," Bhowmick said. "In addition to that, due to its design, the memory footprint of TailorNet is huge, which restricts its use in real-time applications with less computational power."

The main objective of the recent study by Bhowmick and his co-author was to develop a lightweight system that considers a person's body measurements and drapes 3D garments over an avatar that matches those measurements. Ideally, they wanted this system to require little memory and computational power, so that it could run in real time, for instance on online clothing websites.

The estimated 3D body of the same customer in the image above, derived from the RGB image. Credit: Tiwari & Bhowmick.

"DeepDraper is a deep learning-based garment draping system that allows customers to virtually try garments from a digital wardrobe onto their own bodies in 3D," Bhowmick explained. "Essentially, it takes an image or a short video clip of the customer, and a garment from a digital wardrobe provided by the seller, as inputs."

First, DeepDraper analyzes images or videos of a person to estimate his/her 3D body shape, pose and body measurements. It then feeds these estimates to a draping neural network that predicts how a garment would look on the user's body, by applying it onto a virtual avatar.
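
For readers curious about how the two stages fit together, the Python sketch below shows only the data flow described above. Every name in it (`BodyEstimate`, `estimate_body`, `drape_garment`, `virtual_try_on`) is hypothetical, and both stages are simple placeholders rather than DeepDraper's actual networks: the body estimator returns fixed values, and the "draping" step merely rescales a template garment to the measured chest girth, whereas the real system uses a trained neural network to predict how the garment sits, folds and wrinkles on the body. The shape and pose vector sizes follow the SMPL body-model convention, which is an assumption here.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Vertex = Tuple[float, float, float]  # (x, y, z) position of one garment-mesh vertex


@dataclass
class BodyEstimate:
    """Hypothetical output of stage 1: body shape, pose and measurements."""
    shape: List[float]              # shape coefficients (SMPL-style size, assumed)
    pose: List[float]               # joint rotations (SMPL-style size, assumed)
    measurements: Dict[str, float]  # body measurements in centimetres


def estimate_body(frames: List[str]) -> BodyEstimate:
    """Hypothetical stand-in for stage 1 (image/video -> 3D body).

    A real estimator would infer these values from the pixels of each frame;
    here they are fixed placeholders so that the data flow can be run.
    """
    if not frames:
        raise ValueError("need at least one image or video frame")
    return BodyEstimate(
        shape=[0.0] * 10,
        pose=[0.0] * 72,
        measurements={"chest": 100.0, "waist": 85.0},
    )


def drape_garment(template: List[Vertex], template_chest: float,
                  body: BodyEstimate) -> List[Vertex]:
    """Hypothetical stand-in for stage 2 (the draping network).

    It only scales the garment horizontally (x and z) by the ratio of the
    measured chest girth to the template's chest girth; it does not model
    folds, wrinkles or body-garment collisions as a trained network would.
    """
    scale = body.measurements["chest"] / template_chest
    return [(x * scale, y, z * scale) for (x, y, z) in template]


def virtual_try_on(frames: List[str], template: List[Vertex],
                   template_chest: float) -> List[Vertex]:
    """Chain the two stages: estimate the body, then drape the garment on it."""
    body = estimate_body(frames)
    return drape_garment(template, template_chest, body)


if __name__ == "__main__":
    # Two vertices of a toy T-shirt template sized for an 80 cm chest.
    tshirt = [(10.0, 0.0, 5.0), (-10.0, 0.0, 5.0)]
    print(virtual_try_on(["customer.jpg"], tshirt, template_chest=80.0))
```

With the placeholder 100 cm chest and an 80 cm template, the scale factor is 100 / 80 = 1.25, so the example prints `[(12.5, 0.0, 6.25), (-12.5, 0.0, 6.25)]`. Keeping the two stages as separate functions mirrors the article's description: the body estimate is computed once, and any garment from the seller's wardrobe can then be draped onto it.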

The researchers evaluated their technique in a series of tests and found that it outperformed other state-of-the-art approaches, as it predicted how a garment would fit users more accurately and more realistically. In addition, their system was able to drape garments of any size on human bodies of all shapes and with various characteristics.

"Another important feature of DeepDraper is that it is very fast and can be supported by low-end devices such as mobile phones or tablets," Bhowmick said. "More precisely, DeepDraper is about 23 times faster and about 10 times smaller in memory footprint compared to its nearest competitor TailorNet."

In the future, the virtual garment-draping technique created by this team of researchers could allow clothing and fashion companies to improve their users' online shopping experience. By allowing potential buyers to get a better idea of how clothes would look on them before purchasing them, it could also reduce requests for refunds or product exchanges. In addition, DeepDraper could be used by game developers or 3D media content creators to dress characters more efficiently and realistically.

"In our next studies, we plan to extend DeepDraper to virtually try on other challenging, loose, and multilayered garments, such as dresses, gowns, t-shirts with jackets, etc. Currently, DeepDraper drapes the garment on a static human body, but we eventually plan to drape and animate the garment consistently as humans move."

More information: DeepDraper: Fast and accurate 3D garment draping over a 3D human body. The Computer Vision Foundation (2021).

© 2021 Science X Network

Citation: DeepDraper: A technique that predicts how clothes would look on different people (2021, October 26) retrieved 26 October 2021 from https://techxplore.com/news/2021-10-deepdraper-technique-people.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.