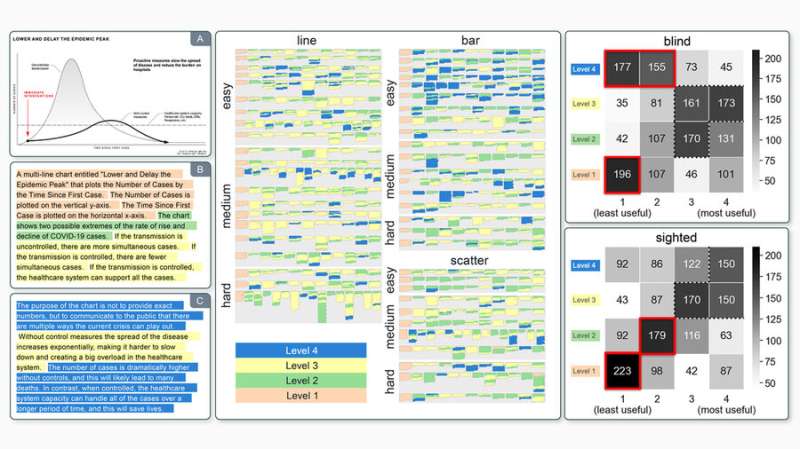

Three columns containing various graphics. The first contains the canonical Flatten the Curve coronavirus chart and two textual descriptions of that chart, color-coded according to the four levels of the semantic content model presented in the paper. The second contains a corpus visualization of 2,147 sentences describing charts, also color-coded, and faceted by chart type and difficulty. The third contains two heat maps, corresponding to blind and sighted readers' ranked preferences for the four levels of semantic content, indicating that blind and sighted readers have sharply diverging preferences. Credit: Massachusetts Institute of Technology


In the early days of the COVID-19 pandemic, the Centers for Disease Control and Prevention produced a simple chart to illustrate how measures like mask wearing and social distancing could "flatten the curve" and reduce the peak of infections.

The chart was amplified by news sites and shared on social media platforms, but it often lacked a corresponding text description to make it accessible for blind individuals who use a screen reader to navigate the web, shutting out many of the 253 million people worldwide who have visual disabilities.

This alternative text is often missing from online charts, and even when it is included, it is often uninformative or even incorrect, according to qualitative data gathered by scientists at MIT.

These researchers conducted a study with blind and sighted readers to determine which text is useful to include in a chart description, which text is not, and why. Ultimately, they found that captions for blind readers should focus on the overall trends and statistics in the chart, not its design elements or higher-level insights.

They also created a conceptual model that can be used to evaluate a chart description, whether the text was generated automatically by software or manually by a human author. Their work could help journalists, academics, and communicators create descriptions that are more effective for blind individuals and guide researchers as they develop better tools to automatically generate captions.

"Ninety-nine-point-nine percent of images on Twitter lack any kind of description, and that is not hyperbole, that is the actual statistic," says Alan Lundgard, a graduate student in the Computer Science and Artificial Intelligence Laboratory (CSAIL) and lead author of the paper. "Having people manually author those descriptions seems to be difficult for a variety of reasons. Perhaps semiautonomous tools could help with that. But it is crucial to do this preliminary participatory design work to figure out what is the target for these tools, so we are not generating content that is either not useful to its intended audience or, in the worst case, erroneous."

Lundgard wrote the paper with senior author Arvind Satyanarayan, an assistant professor of computer science who leads the Visualization Group in CSAIL. The research will be presented at the Institute of Electrical and Electronics Engineers Visualization Conference in October.

Evaluating visualizations

To develop the conceptual model, the researchers planned to begin by studying graphs featured by popular online publications such as FiveThirtyEight and NYTimes.com, but they ran into a problem: those charts mostly lacked any textual descriptions. So instead, they collected descriptions for these charts from graduate students in an MIT data visualization class and through an online survey, then grouped the captions into four categories.

Level 1 descriptions focus on the elements of the chart, such as its title, legend, and colors. Level 2 descriptions describe statistical content, like the minimum, maximum, or correlations. Level 3 descriptions cover perceptual interpretations of the data, like complex trends or clusters. Level 4 descriptions include subjective interpretations that go beyond the data and draw on the author's knowledge.
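As a rough illustration only, the four levels could be represented in code as a small enumeration used to tag the sentences of a chart description. This is a minimal sketch, not the researchers' implementation: the names `SemanticLevel`, `TaggedSentence`, and `select_levels` are hypothetical, and the example sentences are invented for demonstration.

```python
from dataclasses import dataclass
from enum import IntEnum


class SemanticLevel(IntEnum):
    """The four levels of semantic content, as summarized in this article."""
    ELEMENTS = 1    # chart elements: title, legend, colors
    STATISTICS = 2  # statistical content: minimum, maximum, correlations
    PERCEPTUAL = 3  # perceptual interpretations: complex trends, clusters
    SUBJECTIVE = 4  # subjective interpretations drawing on the author's knowledge


@dataclass(frozen=True)
class TaggedSentence:
    """One sentence of a chart description with its assigned level (hypothetical type)."""
    text: str
    level: SemanticLevel


def select_levels(sentences, keep):
    """Return the sentences whose level is in `keep`, preserving their order."""
    wanted = set(keep)
    return [s for s in sentences if s.level in wanted]


# Invented example description; the sentences are not taken from the study's corpus.
description = [
    TaggedSentence("The chart has a title, a legend, and two colored curves.",
                   SemanticLevel.ELEMENTS),
    TaggedSentence("The first curve has a higher maximum than the second.",
                   SemanticLevel.STATISTICS),
    TaggedSentence("The second curve rises more gradually and peaks later.",
                   SemanticLevel.PERCEPTUAL),
    TaggedSentence("Protective measures are clearly worth the effort.",
                   SemanticLevel.SUBJECTIVE),
]

# Keep only statistical and perceptual content (levels 2 and 3).
trends_and_stats = select_levels(
    description, {SemanticLevel.STATISTICS, SemanticLevel.PERCEPTUAL}
)
for sentence in trends_and_stats:
    print(f"Level {int(sentence.level)}: {sentence.text}")
```

Using an integer-backed enumeration keeps the level numbers from the article visible in the code while still giving each level a readable name. Which levels to keep for a given audience is left as a parameter here, since that choice is a design decision rather than something this sketch can settle.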

In a study with blind and sighted readers, the researchers presented visualizations with descriptions at different levels and asked participants to rate how useful they were. While both groups agreed that level 1 content on its own was not very helpful, sighted readers gave level 4 content the highest marks while blind readers ranked that content among the least useful.

Survey results revealed that a majority of blind readers were emphatic that descriptions should not contain an author's editorialization, but rather stick to straight facts about the data. On the other hand, most sighted readers preferred a description that told a story about the data.

"For me, a surprising finding about the lack of utility for the highest-level content is that it ties very closely to feelings about agency and control as a disabled person. In our research, blind readers specifically didn't want the descriptions to tell them what to think about the data. They want the data to be accessible in a way that allows them to interpret it for themselves, and they want to have the agency to do that interpretation," Lundgard says.

A more inclusive future

This work could have implications as data scientists continue to develop and refine machine learning methods for autogenerating captions and alternative text.

"We are not able to do it yet, but it is not inconceivable to imagine that in the future we would be able to automate the creation of some of this higher-level content and build models that target level 2 or level 3 in our framework. And now we know what the research questions are. If we want to produce these automated captions, what should those captions say? We are able to be a bit more directed in our future research because we have these four levels," Satyanarayan says.

In the future, the four-level model could also help researchers develop machine learning models that can automatically suggest effective visualizations as part of the data analysis process, or models that can extract the most useful information from a chart.

This research could also inform future work in Satyanarayan's group that seeks to make interactive visualizations more accessible for blind readers who use a screen reader to access and interpret the information.

"The question of how to ensure that charts and graphs are accessible to screen reader users is both a socially important equity issue and a challenge that can advance the state-of-the-art in AI," says Meredith Ringel Morris, director and principal scientist of the People + AI Research team at Google Research, who was not involved with this study. "By introducing a framework for conceptualizing natural language descriptions of information graphics that is grounded in end-user needs, this work helps ensure that future AI researchers will focus their efforts on problems aligned with end-users' values."

Morris adds: "Rich natural-language descriptions of data graphics will not only expand access to critical information for people who are blind, but will also benefit a much wider audience as eyes-free interactions via smart speakers, chatbots, and other AI-powered agents become increasingly commonplace."

More information: Alan Lundgard et al, Accessible Visualization via Natural Language Descriptions: A Four-Level Model of Semantic Content, IEEE Transactions on Visualization and Computer Graphics (2021). DOI: 10.1109/TVCG.2021.3114770

This story is republished courtesy of MIT News (web.mit.edu/newsoffice/), a popular site that covers news about MIT research, innovation and teaching.

Citation: Blind and sighted readers have sharply different takes on what content is most useful to include in a chart caption (2021, October 12) retrieved 12 October 2021 from https://techxplore.com/news/2021-10-sighted-readers-sharply-content.html

