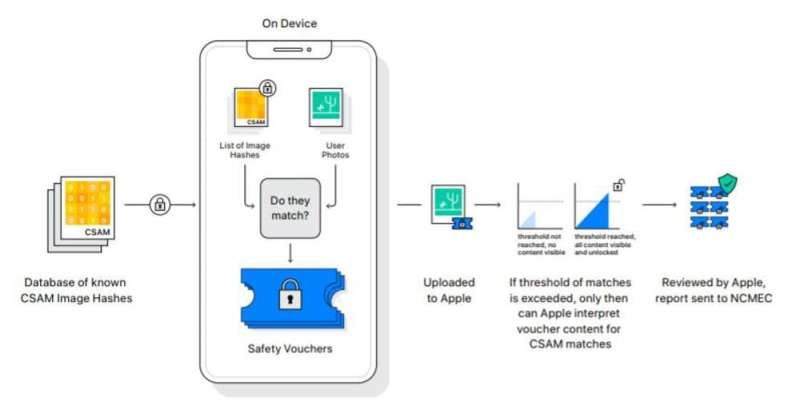

Apple's new system for comparing your photos with a database of known images of child abuse works on your device rather than on a server. Credit: Apple

The proliferation of child sexual abuse material on the internet is harrowing and sobering. Technology companies send tens of millions of reports per year of these images to the nonprofit National Center for Missing and Exploited Children.

The way companies that provide cloud storage for your images usually detect child abuse material leaves you vulnerable to privacy violations by the companies, and by hackers who break into their computers. On Aug. 5, 2021, Apple announced a new way to detect this material that promises to better protect your privacy.

As a computer scientist who studies cryptography, I can explain how Apple's system works, why it's an improvement, and why Apple needs to do more.

Who holds the key?

Digital files can be protected in a sort of virtual lockbox via encryption, which garbles a file so that it can be revealed, or decrypted, only by someone holding a secret key. Encryption is one of the best tools for protecting personal information as it traverses the internet.

Can a cloud service provider detect child abuse material if the photos are garbled using encryption? It depends on who holds the secret key.

Many cloud providers, including Apple, keep a copy of the secret key so they can assist you in data recovery if you forget your password. With the key, the provider can also match photos stored on the cloud against known child abuse images held by the National Center for Missing and Exploited Children.

But this convenience comes at a big cost. A cloud provider that stores secret keys might abuse its access to your data or fall prey to a data breach.

A better approach to online safety is end-to-end encryption, in which the secret key is stored only on your own computer, phone or tablet. In this case, the provider cannot decrypt your photos. Apple's answer to checking for child abuse material that's protected by end-to-end encryption is a new procedure in which the cloud service provider, meaning Apple, and your device perform the image matching together.

Spotting evidence without looking at it

Though that might sound like magic, with modern cryptography it's actually possible to work with data that you cannot see. I have contributed to projects that use cryptography to measure the gender wage gap without learning anyone's salary, and to detect repeat offenders of sexual assault without reading any victim's report. And there are many more examples of companies and governments using cryptographically protected computing to provide services while safeguarding the underlying data.
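
To make "working with data you cannot see" concrete, here is a minimal Python sketch of one standard technique, additive secret sharing, applied to a salary total. It is an illustration under my own assumptions (three helper parties, a toy modulus, made-up salaries, hypothetical function names), not the code used in the projects mentioned above.

```python
import secrets

# Toy modulus (an assumption for this sketch); all arithmetic wraps around it.
MODULUS = 2**61 - 1


def share(value, num_parties):
    """Split a value into random-looking pieces that add up to it modulo MODULUS."""
    pieces = [secrets.randbelow(MODULUS) for _ in range(num_parties - 1)]
    pieces.append((value - sum(pieces)) % MODULUS)
    return pieces


def secure_sum(values, num_parties=3):
    """Total the values while each party only ever sees one piece of each value."""
    all_pieces = [share(v, num_parties) for v in values]
    # Party k receives the k-th piece of every value and adds them up locally.
    partial_sums = [sum(column) % MODULUS for column in zip(*all_pieces)]
    # Only the partial sums are combined, which reveals the total and nothing else.
    return sum(partial_sums) % MODULUS


total = secure_sum([52_000, 61_000, 58_000])  # hypothetical salaries
```

Each piece on its own is a uniformly random number, so no single party learns a salary, yet the pieces still add up to the correct total.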

Apple's proposed image matching on iCloud Photos uses cryptographically protected computing to scan photos without seeing them. It's based on a tool called private set intersection that has been studied by cryptographers since the 1980s. This tool allows two people to discover files that they have in common while hiding the rest.
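
As a rough illustration of the idea, the following Python sketch implements a textbook private set intersection based on commutative blinding. It is not Apple's protocol: the modulus, the function names and the file names are assumptions chosen for readability, and both parties run inside one function here, whereas in practice each secret would stay on its owner's machine.

```python
import hashlib
import secrets

# Toy modulus (the Mersenne prime 2^127 - 1). An assumption for illustration only;
# a real deployment would use a vetted elliptic-curve group.
P = 2**127 - 1


def hash_to_group(item: str) -> int:
    """Hash an item to a number modulo P (squared so it lands in one subgroup)."""
    digest = hashlib.sha256(item.encode("utf-8")).digest()
    return pow(int.from_bytes(digest, "big") % P, 2, P)


def blind(values, secret_exponent):
    """Raise every value to a secret power modulo P."""
    return [pow(v, secret_exponent, P) for v in values]


def private_set_intersection(alice_items, bob_items):
    """Return Alice's items that Bob also holds, comparing only blinded values."""
    alice_secret = secrets.randbelow(P - 3) + 2
    bob_secret = secrets.randbelow(P - 3) + 2

    # Step 1: each side blinds its own hashed items and sends them across.
    alice_blinded = blind([hash_to_group(x) for x in alice_items], alice_secret)
    bob_blinded = blind([hash_to_group(x) for x in bob_items], bob_secret)

    # Step 2: each side blinds what it received with its own secret.
    # Exponentiation commutes, so a shared item ends up with the same value.
    alice_double = blind(alice_blinded, bob_secret)      # computed by Bob, order kept
    bob_double = set(blind(bob_blinded, alice_secret))   # computed by Alice

    return [item for item, tag in zip(alice_items, alice_double) if tag in bob_double]


common = private_set_intersection(
    ["cat.jpg", "dog.jpg", "tree.jpg"],
    ["dog.jpg", "car.jpg", "tree.jpg"],
)
```

Items held by only one side never appear in the clear to the other side; only the double-blinded values are compared.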

Here's how the image matching works. Apple distributes to everyone's iPhone, iPad and Mac a database containing indecipherable encodings of known child abuse images. For each photo that you upload to iCloud, your device applies a digital fingerprint, called NeuralHash. The fingerprinting works even if someone makes small changes in a photo. Your device then creates a voucher for your photo that your device can't understand, but that tells the server whether the uploaded photo matches child abuse material in the database.
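
To see why a fingerprint can survive small changes, consider this Python sketch of a much simpler stand-in, the classic "average hash." NeuralHash itself is a different, neural-network-based algorithm whose details are not described here; the tiny 4x4 grayscale "images" and the function names are assumptions for illustration.

```python
def average_hash(pixels):
    """Fingerprint a grayscale image: one bit per pixel, set if brighter than the mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return tuple(1 if value > mean else 0 for value in flat)


def hamming_distance(a, b):
    """Count the positions at which two fingerprints differ."""
    return sum(x != y for x, y in zip(a, b))


# Dark on the left, bright on the right.
original = [
    [10, 20, 200, 210],
    [15, 25, 205, 215],
    [12, 22, 202, 212],
    [18, 28, 208, 218],
]
# The same picture, brightened slightly everywhere.
tweaked = [[value + 3 for value in row] for row in original]
# A different picture: bright on the left, dark on the right.
different = [list(reversed(row)) for row in original]

same_fingerprint = average_hash(original) == average_hash(tweaked)
distance_to_different = hamming_distance(average_hash(original), average_hash(different))
```

The brightened copy keeps the same fingerprint because every pixel stays on the same side of the mean, while the mirrored picture differs in every bit.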

If enough vouchers from a device indicate matches to known child abuse images, the server learns the secret keys to decrypt all of the matching photos, but not the keys for other photos. Otherwise, the server cannot view any of your photos.
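
One standard way to get this "nothing until enough matches" behavior is threshold secret sharing, in which a key is split into shares and any sufficiently large subset of them rebuilds it. The Python sketch below uses Shamir's scheme with assumed parameters (a threshold of 3, five shares, a toy prime field, a made-up key); it illustrates the principle and is not a description of Apple's exact construction.

```python
import secrets

PRIME = 2**127 - 1  # toy field size, an assumption for this sketch


def split_secret(secret, threshold, num_shares):
    """Hide the secret as the constant term of a random polynomial of degree threshold - 1."""
    coefficients = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]

    def evaluate(x):
        result = 0
        for coefficient in reversed(coefficients):  # Horner's rule
            result = (result * x + coefficient) % PRIME
        return result

    return [(x, evaluate(x)) for x in range(1, num_shares + 1)]


def recover_secret(shares):
    """Interpolate the polynomial at x = 0 from the given (x, y) shares."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        numerator, denominator = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                numerator = (numerator * -xj) % PRIME
                denominator = (denominator * (xi - xj)) % PRIME
        # pow(..., -1, PRIME) is the modular inverse (Python 3.8+).
        secret = (secret + yi * numerator * pow(denominator, -1, PRIME)) % PRIME
    return secret


photo_key = 123456789  # hypothetical decryption key
shares = split_secret(photo_key, threshold=3, num_shares=5)
recovered = recover_secret(shares[:3])
```

In this picture, each matching voucher would hand the server one share. With fewer shares than the threshold, interpolation yields a value unrelated to the key.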

Having this matching procedure take place on your device can be better for your privacy than the previous methods, in which the matching takes place on a server, provided it's deployed properly. But that's a big caveat.

Figuring out what could go wrong

There's a line in the movie "Apollo 13" in which Gene Kranz, played by Ed Harris, proclaims, "I don't care what anything was designed to do. I care about what it can do!" Apple's phone scanning technology is designed to protect privacy. Computer security and tech policy experts are trained to discover ways that a technology can be used, misused and abused, regardless of its creator's intent. However, Apple's announcement lacks information to analyze essential components, so it is not possible to evaluate the safety of its new system.

Security researchers need to see Apple's code to validate that the device-assisted matching software is faithful to the design and doesn't introduce errors. Researchers also must test whether it's possible to fool Apple's NeuralHash algorithm into changing fingerprints by making imperceptible changes to a photo.

It's also important for Apple to develop an auditing policy to hold the company accountable for matching only child abuse images. The threat of mission creep was a risk even with server-based matching. The good news is that on-device matching offers new opportunities to audit Apple's actions because the encoded database binds Apple to a specific image set. Apple should allow everyone to check that they've received the same encoded database and third-party auditors to validate the images contained in this set. These public accountability goals can be achieved using cryptography.
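
A simple version of the "same database for everyone" check can be sketched in Python with an ordinary cryptographic hash. The sketch assumes, hypothetically, that a reference digest of the encoded database is published somewhere public; the function names and the sample entries are mine, not part of any announced Apple interface.

```python
import hashlib
import hmac


def database_digest(encoded_entries):
    """Compute one SHA-256 digest over every entry, in order."""
    hasher = hashlib.sha256()
    for entry in encoded_entries:
        # Prefix each entry with its length so entry boundaries cannot be shifted.
        hasher.update(len(entry).to_bytes(8, "big"))
        hasher.update(entry)
    return hasher.hexdigest()


def matches_published(encoded_entries, published_digest):
    """Check a device's copy of the database against the publicly posted digest."""
    return hmac.compare_digest(database_digest(encoded_entries), published_digest)


# Hypothetical encoded entries standing in for the real database.
reference_copy = [b"encoded-entry-1", b"encoded-entry-2", b"encoded-entry-3"]
published = database_digest(reference_copy)

my_copy_ok = matches_published(list(reference_copy), published)
tampered_ok = matches_published(reference_copy + [b"extra-entry"], published)
```

If a device were sent a database with even one extra or altered entry, its digest would no longer match the published one, which is what makes silent, targeted changes detectable.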

Apple's proposed image-matching technology has the potential to improve digital privacy and child safety, especially if Apple follows this move by giving iCloud end-to-end encryption. But no technology on its own can fully answer complex social problems. All options for how to use encryption and image scanning have delicate, nuanced effects on society.

These delicate questions require time and space to reason through potential consequences of even well-intentioned actions before deploying them, through dialogue with affected groups and researchers with a wide variety of backgrounds. I urge Apple to join this dialogue so that the research community can collectively improve the safety and accountability of this new technology.

This article is republished from The Conversation under a Creative Commons license. Read the original article.

Citation: Apple can scan your photos for child abuse and still protect your privacy (2021, August 11) retrieved 11 August 2021 from https://techxplore.com/news/2021-08-apple-scan-photos-child-abuse.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.