Credit: Smith et al.

Legged robots have many advantageous qualities, including the ability to travel long distances and navigate a wide range of land-based environments. So far, however, legged robots have mainly been trained to move in specific environments, rather than to adapt to their surroundings and operate efficiently in a multitude of different settings. A key reason for this is that it is extremely challenging to predict every environmental condition a robot might encounter while operating, and to train it to respond well to each of them.

Researchers at Berkeley AI Research and UC Berkeley have recently developed a reinforcement-learning-based computational technique that could circumvent this problem by allowing legged robots to actively learn from their surrounding environment and continuously improve their locomotion skills. This technique, presented in a paper pre-published on arXiv, can fine-tune a robot's locomotion policies in the real world, allowing it to move more effectively in a variety of environments.

"We can't pre-train robots in such a way that they will never fail when deployed in the real world," Laura Smith, one of the researchers who carried out the study, told TechXplore. "So, for robots to be autonomous, they must be able to recover and learn from failures. In this work, we develop a system for performing RL in the real world to enable robots to do just that."

The reinforcement learning approach devised by Smith and her colleagues builds on a motion imitation framework that researchers at UC Berkeley developed previously. This framework allows legged robots to easily acquire locomotion skills by observing and imitating the movements of animals.
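Motion imitation of this kind is usually framed as a tracking problem: at each time step, the policy is rewarded for matching a reference pose taken from an animal motion clip. The following is a minimal sketch of one common reward form, an exponentiated negative squared joint-angle error. The function name `pose_imitation_reward` and the `scale` constant are illustrative assumptions rather than details from the paper, and a full imitation reward typically combines several such terms (for example, for joint velocities and body position).

```python
import math
from typing import Sequence


def pose_imitation_reward(
    joint_angles: Sequence[float],
    reference_angles: Sequence[float],
    scale: float = 5.0,  # illustrative sharpness constant, not taken from the paper
) -> float:
    """Hypothetical pose-tracking reward in (0, 1]; equals 1.0 only for a perfect match."""
    if len(joint_angles) != len(reference_angles):
        raise ValueError("pose vectors must have the same length")
    # Sum of squared joint-angle errors between the robot pose and the reference (animal) pose.
    squared_error = sum((q_ref - q) ** 2 for q, q_ref in zip(joint_angles, reference_angles))
    # Exponentiating the negative error maps it to a bounded, smoothly decaying reward.
    return math.exp(-scale * squared_error)


print(pose_imitation_reward([0.1, -0.4, 0.8], [0.1, -0.4, 0.8]))  # 1.0
print(pose_imitation_reward([0.0, -0.4, 0.8], [0.1, -0.4, 0.8]))  # slightly below 1.0
```

The exponential keeps the reward bounded, so a single badly tracked joint lowers the reward without producing an unbounded penalty.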

In addition, the new technique introduced by the researchers uses a model-free reinforcement learning algorithm devised by a team at New York University (NYU), dubbed the randomized ensembled double Q-learning (REDQ) algorithm. Essentially, this is a computational method that allows computers and robotic systems to continuously learn from prior experience in a very sample-efficient manner.
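To give a rough idea of what makes REDQ distinctive, the sketch below computes its soft Bellman target. The learner keeps an ensemble of N Q-functions, but each target uses the minimum over a small random subset of M of them (M = 2 by default in the REDQ paper), minus an entropy term. Here the next-state Q-values are passed in as plain numbers, and the function name and arguments are hypothetical; a real implementation computes them with neural networks.

```python
import random
from typing import Optional, Sequence


def redq_target(
    reward: float,
    done: bool,
    next_q_values: Sequence[float],  # target-network estimates Q_i(s', a') for all N ensemble members
    next_log_prob: float,            # log pi(a' | s') for the next action a' sampled from the policy
    gamma: float = 0.99,             # discount factor
    alpha: float = 0.2,              # entropy temperature (illustrative value)
    subset_size: int = 2,            # M in the REDQ paper; must not exceed N
    rng: Optional[random.Random] = None,
) -> float:
    """Soft Bellman target computed REDQ-style from an ensemble of Q estimates."""
    if rng is None:
        rng = random.Random()
    # Randomly pick M of the N Q-functions and keep the most pessimistic estimate,
    # which counteracts Q-value overestimation.
    min_q = min(rng.sample(list(next_q_values), subset_size))
    # Entropy-regularised bootstrap, dropped at terminal transitions.
    bootstrap = 0.0 if done else gamma * (min_q - alpha * next_log_prob)
    return reward + bootstrap


# N = 10 hypothetical target-network estimates for the next state-action pair.
ensemble = [1.2, 0.9, 1.5, 1.1, 1.0, 1.3, 0.8, 1.4, 1.2, 1.05]
y = redq_target(reward=0.5, done=False, next_q_values=ensemble,
                next_log_prob=-1.0, rng=random.Random(0))
print(y)  # every one of the N Q-functions would then be regressed toward y
```

In REDQ the same target is used to update all N Q-functions, the subset is redrawn for each update, and many such updates are performed per environment step. That high update-to-data ratio, kept stable by the ensemble, is the main source of its sample efficiency.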

"First, we pre-trained a model that gives robots locomotion skills, including a recovery controller, in simulation," Smith explained. "Then, we simply continued to train the robot once it was deployed in a new environment in the real world, resetting it with a learned controller. Our system relies only on the robot's onboard sensors, so we were able to train the robot in unstructured, outdoor settings."

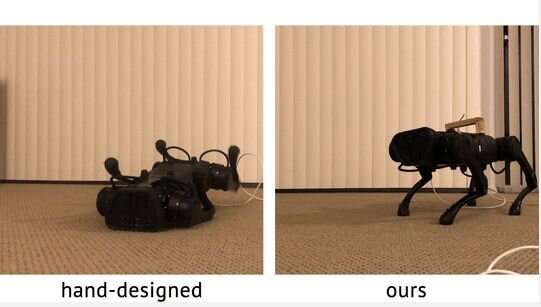

The researchers evaluated their reinforcement learning system in a series of experiments by applying it to a four-legged robot and observing how it learned to move on different terrains and materials, including carpet, lawn, memory foam and a doormat. Their findings were highly promising, as their technique allowed the robot to autonomously fine-tune its locomotion strategies as it moved on each of the different surfaces.

"We also found that we could treat the recovery controller as another learned locomotion skill and use it to automatically reset the robot between trials, without requiring an expert to engineer a recovery controller or someone to manually intervene during the learning process," Smith said.
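The reset scheme Smith describes can be pictured as a loop that alternates between two learned skills: the walking policy runs trials, and whenever the robot falls, the learned recovery policy gets it back up instead of a person, with both skills continuing to learn from their own attempts. The toy sketch below shows only that control flow. `Skill`, its scalar `success_rate` and the `fine_tune` nudge are hypothetical placeholders for real neural-network policies, fall detection and off-policy updates, and none of these names come from the paper.

```python
import random
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Skill:
    """Hypothetical stand-in for one learned controller and its replay buffer."""

    name: str
    success_rate: float  # toy proxy for the current policy's quality
    buffer: List[bool] = field(default_factory=list)

    def attempt(self, rng: random.Random) -> bool:
        # Run the skill once on the (toy) robot and log the outcome as experience.
        succeeded = rng.random() < self.success_rate
        self.buffer.append(succeeded)
        return succeeded

    def fine_tune(self, step: float = 0.02) -> None:
        # Placeholder for real off-policy updates on the buffer:
        # here the toy success rate is simply nudged upward, capped at 1.0.
        self.success_rate = min(1.0, self.success_rate + step)


def autonomous_fine_tuning(num_trials: int, seed: int = 0) -> Dict[str, float]:
    rng = random.Random(seed)
    walk = Skill("walk", success_rate=0.5)
    recover = Skill("recover", success_rate=0.6)
    falls = 0
    for _ in range(num_trials):
        if not walk.attempt(rng):
            falls += 1
            # The learned recovery skill resets the robot instead of a human,
            # and it keeps learning from its own attempts as well.
            while not recover.attempt(rng):
                recover.fine_tune()
            recover.fine_tune()
        walk.fine_tune()
    return {
        "falls": falls,
        "recovery_attempts": len(recover.buffer),
        "walk_success_rate": walk.success_rate,
        "recover_success_rate": recover.success_rate,
    }


print(autonomous_fine_tuning(num_trials=30))
```

Because the recovery skill is just another learned policy, the loop needs no hand-engineered reset routine and no manual intervention, which is what lets training continue unattended.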

In the future, the new reinforcement learning technique developed by this team of researchers could be used to significantly improve the locomotion skills of both existing and newly developed legged robots, allowing them to move on a wide variety of surfaces and terrains. This could in turn facilitate the use of these robots for complex missions that involve traveling long distances on land while passing through many environments with different characteristics.

"We are now excited to extend our system into a lifelong learning process, where a robot never stops learning as it faces the diverse, ever-changing situations it encounters in the real world," Smith said.

More information: Laura Smith et al, Legged robots that keep on learning: Fine-tuning locomotion policies in the real world. arXiv:2110.05457v1 [cs.RO], arxiv.org/abs/2110.05457

Xue Bin Peng et al, Learning agile robotic locomotion skills by imitating animals. arXiv:2004.00784v3 [cs.RO], arxiv.org/abs/2004.00784

Xinyue Chen et al, Randomized ensembled double Q-learning: learning fast without a model. arXiv:2101.05982v2 [cs.LG], arxiv.org/abs/2101.05982

© 2021 Science X Network

Citation: A technique that allows legged robots to continuously learn from their environment (2021, November 1) retrieved 1 November 2021 from https://techxplore.com/news/2021-11-technique-legged-robots-environment.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.