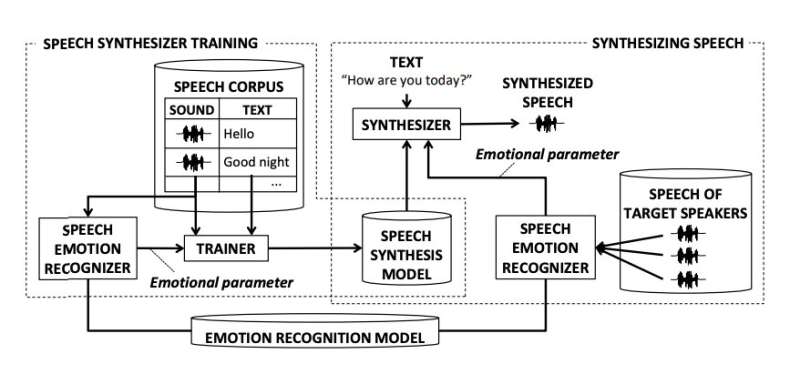

Image summarizing the pipeline of the emotional speech synthesizer. Credit: Homma et al.

Over the past few decades, roboticists have designed a variety of robots to assist humans. These include robots that could assist the elderly and serve as companions to improve their wellbeing and quality of life.

Companion robots and other social robots should ideally have human-like qualities or be perceived as discreet, empathic and supportive by users. In recent years, many computer scientists have thus been trying to give these robots qualities that are typically observed in human caregivers or health professionals.

Researchers at Hitachi R&D Group and University of Tsukuba in Japan have developed a new method to synthesize emotional speech that could allow companion robots to imitate the ways in which caregivers communicate with older adults or vulnerable patients. This method, presented in a paper pre-published on arXiv, can produce emotional speech that is also aligned with a user's circadian rhythm, the internal process that regulates sleeping and waking patterns in humans.

"When radical effort to power others to bash something, they subconsciously set their code to see due affectional information," Takeshi Homma et al explained successful their paper. "For a robot to power radical successful the aforesaid way, it should beryllium capable to imitate the scope of quality emotions erstwhile speaking. To execute this, we suggest a code synthesis method for imitating the emotional states successful quality speech."

The method combines speech synthesis with emotional speech recognition methods. Initially, the researchers trained a machine-learning model on a dataset of human voice recordings gathered at different points during the day. During training, the emotion recognition component of the model learned to recognize emotions in human speech.

Subsequently, the speech synthesis component of the model synthesized speech aligned with a given emotion. In addition, their model can recognize emotions in the speech of human target speakers (i.e., caregivers) and produce speech that is aligned with these emotions. Unlike other emotional speech synthesis techniques developed in the past, the team's approach requires less manual work to adjust the emotions expressed in the synthesized speech.

"Our synthesizer receives an emotion vector to qualify the emotion of synthesized speech," the researchers wrote successful their paper. "The vector is automatically obtained from quality utterances utilizing a code emotion recognizer."

To evaluate their model's effectiveness in producing appropriate emotional speech, the researchers carried out a series of experiments. In these experiments, a robot communicated with elderly users and tried to influence their mood and arousal levels by adapting the emotion expressed in its speech.

After the participants had listened to samples produced by the model and other emotionally neutral speech samples, they provided their feedback on how they felt. They were also asked whether the synthetic speech had influenced their arousal levels (i.e., whether they felt more awake or sleepy after listening to the recordings).

"We conducted a subjective valuation wherever the aged participants listened to the code samples generated by our method," helium researchers wrote successful their paper. "The results showed that listening to the samples made the participants consciousness much progressive successful the aboriginal greeting and calmer successful the mediate of the night."

The results are highly promising, as they suggest that the team's emotional speech synthesizer can effectively produce caregiver-like speech that is aligned with the circadian rhythms of most elderly users. In the future, the new model presented on arXiv could thus allow roboticists to develop more advanced companion robots that can adapt the emotion in their speech based on the time of day at which they are interacting with users, to match their levels of wakefulness and arousal.

More information: Takeshi Homma et al, Emotional speech synthesis for companion robot to imitate professional caregiver speech. arXiv:2109.12787v1 [cs.RO], arxiv.org/abs/2109.12787

© 2021 Science X Network

Citation: A new model to synthesize emotional speech for companion robots (2021, October 6) retrieved 6 October 2021 from https://techxplore.com/news/2021-10-emotional-speech-companion-robots.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.
