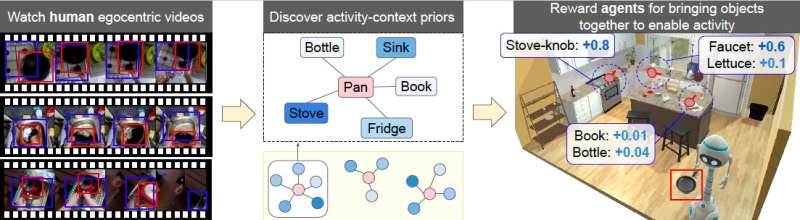

The main idea behind the researchers’ paper. Left and middle panel: The team discovered activity-contexts for objects directly from egocentric video of human activity. A given object’s activity-context goes beyond “what objects are found together” to capture the likelihood that each other object in the environment participates in activities involving it (i.e., “what objects together enable action”). Right panel: The team’s approach guides agents to bring compatible objects (objects with high likelihood) together to enable activities. For example, bringing a pan to the sink increases the value of faucet interactions, but bringing it to the table has little effect on interactions with a book. Credit: Nagarajan & Grauman.

Over the past decade or so, many roboticists and computer scientists have been trying to develop robots that can complete tasks in spaces populated by humans; for instance, helping users to cook, clean and tidy up. To tackle household chores and other manual tasks, robots should be able to solve complex planning tasks that involve navigating environments and interacting with objects following specific sequences.

While some techniques for solving these complex planning tasks have achieved promising results, most are not fully equipped to handle them. As a result, robots cannot yet complete these tasks as well as human agents.

Researchers at UT Austin and Facebook AI Research have recently developed a new framework that could shape the behavior of embodied agents more effectively, using egocentric videos of humans completing everyday tasks. Their paper, pre-published on arXiv and set to be presented at the Neural Information Processing Systems (NeurIPS) Conference in December, introduces a more efficient approach for training robots to complete household chores and other interaction-heavy tasks.

"The overarching goal of this project was to build embodied robotic agents that can learn by watching people interact with their surroundings," Tushar Nagarajan, one of the researchers who carried out the study, told TechXplore. "Reinforcement learning (RL) approaches require millions of attempts to learn intelligent behavior as agents begin by randomly attempting actions, while imitation learning (IL) approaches require experts to control and demonstrate ideal agent behavior, which is costly to collect and requires extra hardware."

In contrast with robotic systems, when entering a new environment, humans can effortlessly complete tasks that involve different objects. Nagarajan and his colleague Kristen Grauman thus set out to investigate whether embodied agents could learn to complete tasks in similar environments simply by observing how humans behave.

Rather than training agents using video demonstrations labeled by humans, which are often costly to collect, the researchers wanted to leverage egocentric (first-person) video footage showing people performing everyday activities, such as cooking a meal or washing dishes. These videos are easier to collect and more readily accessible than annotated demonstrations.

"Our work is the first to use free-form human-generated video captured in the real world to learn priors for object interactions," Nagarajan said. "Our approach converts egocentric video of humans interacting with their surroundings into 'activity-context' priors, which capture what objects, when brought together, enable activities. For example, watching humans do the dishes suggests that utensils, dish soap and a sponge are good objects to have before turning on the faucet at the sink."

To acquire these 'priors' (i.e., useful information about what objects to gather before completing a task), the model created by Nagarajan and Grauman accumulates statistics about pairs of objects that humans tend to use during specific activities. Their model directly detected these objects in egocentric videos from the large dataset used by the researchers.
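As a rough illustration of what accumulating pairwise statistics could look like, the Python sketch below counts how often each object is present when another object is being used, then normalizes the counts into a score between 0 and 1. It is not the researchers' implementation: the function name `activity_context_priors`, the `(active_object, context_objects)` input format and the toy detections are all assumptions made for this example.

```python
from collections import Counter, defaultdict


def activity_context_priors(observations):
    """Estimate, for each active object, how often other objects accompany it.

    `observations` is an iterable of (active_object, context_objects) pairs,
    a hypothetical stand-in for per-clip object detections.
    """
    interaction_counts = Counter()      # times each object was interacted with
    pair_counts = defaultdict(Counter)  # co-participation counts per active object

    for active, context in observations:
        interaction_counts[active] += 1
        for other in set(context):      # count each context object once per clip
            if other != active:
                pair_counts[active][other] += 1

    # Normalize to the fraction of interactions in which `other` was present.
    return {
        active: {other: n / interaction_counts[active] for other, n in others.items()}
        for active, others in pair_counts.items()
    }


# Toy, made-up detections (not taken from the researchers' dataset).
observations = [
    ("faucet", {"pan", "sponge"}),
    ("faucet", {"pan", "dish soap"}),
    ("faucet", {"sponge"}),
    ("faucet", {"pan"}),
    ("book", {"mug"}),
]

priors = activity_context_priors(observations)
print(priors["faucet"])
```

In this toy data the pan accompanies three of the four faucet interactions, so it receives the highest score for the faucet, while the book never appears in the faucet's context at all.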

Subsequently, the model encoded the priors it acquired as a reward in a reinforcement learning framework. Essentially, this means that an agent is rewarded based on what objects it selected for completing a given task.

"For example, turning on the faucet is given a high reward when a pan is brought near the sink (and a low reward if, say, a book is brought near it)," Nagarajan explained. "As a consequence, an agent must intelligently bring the right set of objects to the right locations before attempting interactions with objects, in order to maximize their reward. This helps them reach states that lead to activities, which speeds up learning."

Previous studies have tried to accelerate robot policy learning using similar reward functions. However, typically these are exploration rewards that encourage agents to explore new locations or perform new interactions, without specifically considering the human tasks they are learning to complete.

"Our formulation improves on these previous approaches by aligning the rewards with human activities, helping agents explore more relevant object interactions," Nagarajan said. "Our work is also unique in that it learns priors about object interactions from free-form video, rather than video tied to specific goals (as in behavior cloning). The result is a general-purpose auxiliary reward to encourage efficient RL."

In contrast with priors considered by previously developed approaches, the priors considered by the researchers' model also capture how objects are related in the context of actions that the robot is learning to perform, rather than merely their physical co-occurrence (e.g., spoons can be found near knives) or semantic similarity (e.g., potatoes and tomatoes are similar objects).
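To make the auxiliary reward described earlier more concrete, here is a minimal Python sketch of how activity-context priors might be turned into a reward signal. It is only an illustration under stated assumptions, not the formulation in the paper: the `PRIORS` values are invented, and the function names, the simple summation and the `weight` parameter are hypothetical choices.

```python
# Hypothetical activity-context priors: PRIORS[target][other] is an assumed
# likelihood that `other` takes part in activities involving `target`.
PRIORS = {
    "faucet": {"pan": 0.75, "sponge": 0.5},
    "book": {"mug": 0.25},
}


def activity_context_reward(priors, target, nearby_objects):
    """Sum the prior scores of the objects currently near the interaction target."""
    context = priors.get(target, {})
    # Objects with no learned relationship to the target contribute nothing.
    return sum(context.get(obj, 0.0) for obj in set(nearby_objects))


def shaped_reward(task_reward, auxiliary_reward, weight=0.5):
    """Combine the task reward with the auxiliary term (the weight is an assumption)."""
    return task_reward + weight * auxiliary_reward


pan_at_sink = activity_context_reward(PRIORS, "faucet", ["pan"])
book_at_sink = activity_context_reward(PRIORS, "faucet", ["book"])
print(pan_at_sink, book_at_sink)
```

A book left beside the sink is physically close to the faucet, yet it adds nothing to the faucet's reward here, which is the distinction between activity-context and plain co-occurrence that the article draws.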

The researchers evaluated their model using a dataset of egocentric videos showing humans as they complete everyday chores and tasks in the kitchen. Their results were promising, suggesting that their model could be used to train household robots more effectively than other previously developed techniques.

"Our work is the first to demonstrate that passive video of humans performing daily activities can be used to learn embodied interaction policies," Nagarajan said. "This is a significant achievement, as egocentric video is readily available in large amounts from recent datasets. Our work is a first step towards enabling applications that can learn about how humans perform activities (without the need for costly demonstrations) and then offer assistance in the home-robotics setting."

In the future, the new framework developed by this team of researchers could be used to train a range of physical robots to complete a variety of simple everyday tasks. In addition, it could be used to train augmented reality (AR) assistants, which could, for instance, observe how a human cooks a specific dish and then teach new users to prepare it.

"Our research is an important step towards learning by watching humans, as it captures simple, yet powerful priors about objects involved in activities," Nagarajan added. "However, there are other meaningful things to learn such as: What parts of the environment support activities (scene affordances)? How should objects be manipulated or grasped to use them? Are there important sequences of actions (routines) that can be learned and leveraged by embodied agents? Finally, an important future research direction to pursue is how to take policies learned in simulated environments and deploy them onto mobile robot platforms or AR glasses, in order to build agents that can cooperate with humans in the real world."

More information: Tushar Nagarajan, Kristen Grauman, Shaping embodied agent behavior with activity-context priors from egocentric video. arXiv:2110.07692v1 [cs.CV], arxiv.org/abs/2110.07692

© 2021 Science X Network

Citation: A model that translates everyday human activities into skills for an embodied artificial agent (2021, November 3) retrieved 3 November 2021 from https://techxplore.com/news/2021-11-everyday-human-skills-embodied-artificial.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without written permission. The content is provided for information purposes only.
